Electron Physician. 2014 Apr-Jun; 6(2): 814–815.

Published online 2014 May 10. doi: 10.14661/2014.814-815

PMCID: PMC4324277

Citation Frequency and Ethical Issue

Dear Editor:

I read your publication ethics issue on “bogus impact factors” with great interest (1).

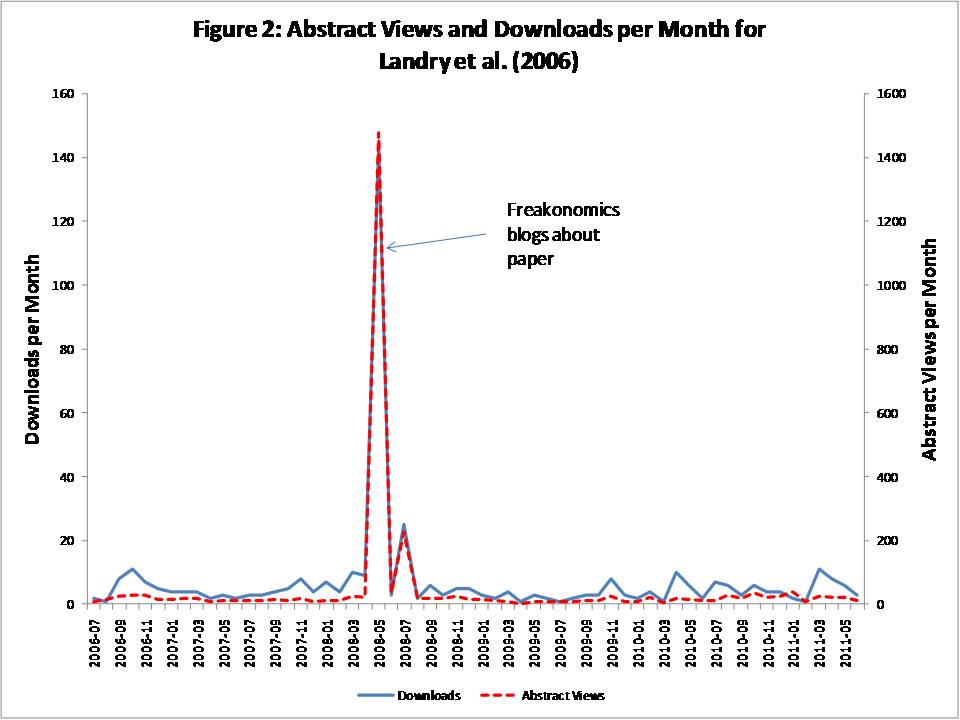

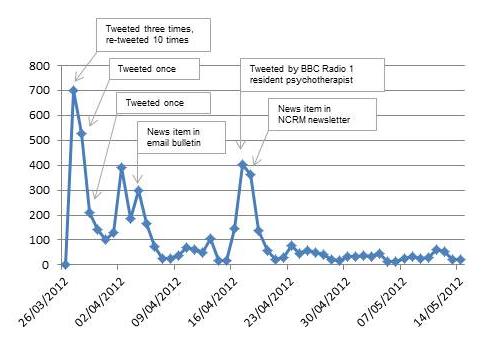

I would like to draw attention to a new trend in the manipulation of citation counts. There are several ethical approaches to increasing the number of citations for a published paper (2). However, it is apparent that some manipulation of citation counts is occurring (3, 4). Self-citations, “those in which the authors cite their own works,” account for a significant portion of all citations (5).

With the advent of information technology, it is easy to identify unusual citation trends for a paper or a journal. A web application that calculates the single publication h-index based on (6) is available online (7, 8). A tool developed by Francisco Couto (9) can measure authors’ citation impact by excluding self-citations.
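As a rough illustration of the two measurements just mentioned, the following Python sketch computes a single publication h-index and recomputes it after self-citations are excluded. It is not the implementation behind the tools in (7–9). It assumes that the single publication h-index is the h-index of the set of papers citing the publication, and that a self-citation is any citing paper sharing at least one author with the cited paper. The `CitingPaper` record, the function names, and the sample data are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CitingPaper:
    """Hypothetical record for one paper that cites the publication under study."""
    authors: frozenset[str]
    citations: int  # number of times this citing paper has itself been cited


def single_publication_h_index(citing: list[CitingPaper]) -> int:
    """Largest h such that h citing papers have at least h citations each."""
    counts = sorted((p.citations for p in citing), reverse=True)
    h = 0
    for rank, count in enumerate(counts, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def exclude_self_citations(
    citing: list[CitingPaper], cited_authors: frozenset[str]
) -> list[CitingPaper]:
    """Drop citing papers that share an author with the cited paper (assumed definition)."""
    return [p for p in citing if not (p.authors & cited_authors)]


# Invented sample data: two of the four citing papers are self-citations.
cited_authors = frozenset({"Author A"})
citing_papers = [
    CitingPaper(frozenset({"Author A", "Author B"}), 5),
    CitingPaper(frozenset({"Author A"}), 4),
    CitingPaper(frozenset({"Author C"}), 3),
    CitingPaper(frozenset({"Author D"}), 1),
]

h_all = single_publication_h_index(citing_papers)
h_external = single_publication_h_index(
    exclude_self_citations(citing_papers, cited_authors)
)
print(h_all, h_external)
```

With this invented data the index falls from 3 to 1 once the two self-citing papers are removed, which is the kind of gap such tools are meant to expose.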

Self-citation is ethical when it is a necessity. Nevertheless, there is a threshold for self-citations. Thomson Reuters’ resource known as the Web of Science (WoS), which currently lists journal impact factors, considers self-citation to be acceptable up to a rate of 20%; anything over that is considered suspect (10).
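The 20% threshold can be expressed as a simple check. The Python sketch below assumes that the self-citation rate is the number of self-citations divided by the total number of citations received; the function names and the sample counts are hypothetical and do not come from WoS.

```python
SUSPECT_THRESHOLD = 0.20  # rate above which self-citation is considered suspect (10)


def self_citation_rate(total_citations: int, self_citations: int) -> float:
    """Share of all citations that are self-citations (assumed definition)."""
    if total_citations <= 0:
        raise ValueError("total_citations must be positive")
    if not 0 <= self_citations <= total_citations:
        raise ValueError("self_citations must lie between 0 and total_citations")
    return self_citations / total_citations


def is_suspect(rate: float, threshold: float = SUSPECT_THRESHOLD) -> bool:
    """A rate up to the threshold is acceptable; anything over it is suspect."""
    return rate > threshold


# Invented counts for a hypothetical journal: 30 self-citations out of 120.
rate = self_citation_rate(total_citations=120, self_citations=30)
print(f"{rate:.0%} suspect={is_suspect(rate)}")
```

Because acceptable rates differ between fields, the threshold is left as a parameter rather than fixed at 20%.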

In some journals, even 5% is considered to be a high rate of self-citation. The ‘Journal Citation Report’ is a reliable source for checking the acceptable level of self-citation in any field of study.

The Public Policy Group of the London School of Economics (LSE) published a handbook, “Maximizing the Impacts of Your Research,” that describes self-citation rates across different groups of disciplines and indicates that they vary up to 40% (11).

Unfortunately, there is no significant penalty for the most frequent self-citers, and the effect of self-citation remains positive even for very high rates of self-citation (5). However, WoS has dropped some journals from its database because of anomalous trends in their citations (4). The same policy should also be applied to the most frequent self-citers. Those who wish to conduct research and publish their findings should adhere to the ethics of publication.

References

1. Jalalian M, Mahboobi H. New corruption detected: Bogus impact factors compiled by fake organizations. Electron Physician. 2013;5(3):685–6. doi: 10.14661/2014.685-686.

2. Ale Ebrahim N, Salehi H, Embi MA, Habibi Tanha F, Gholizadeh H, Motahar SM, et al. Effective Strategies for Increasing Citation Frequency. International Education Studies. 2013;6(11):93–9. doi: 10.5539/ies.v6n11p93. http://opendepot.org/1869/1/30366-105857-1-PB.pdf.

3. Mahian O, Wongwises S. Is it Ethical for Journals to Request Self-citation? Sci Eng Ethics. 2014:1–3. doi: 10.1007/s11948-014-9540-1.

4. Van Noorden R. Brazilian citation scheme outed. Nature. 2013;500:510–1. doi: 10.1038/500510a. http://boletim.sbq.org.br/anexos/Braziliancitationscheme.pdf.

5. Fowler JH, Aksnes DW. Does self-citation pay? Scientometrics. 2007;72(3):427–37. doi: 10.1007/s11192-007-1777-2.

6. Schubert A. Using the h-index for assessing single publications. Scientometrics. 2009;78(3):559–65. doi: 10.1007/s11192-008-2208-3.

7. Thor A, Bornmann L. The calculation of the single publication h index and related performance measures: a web application based on Google Scholar data. Online Inform Rev. 2011;35(2):291–300.

8. Thor A, Bornmann L. Web application to calculate the single publication h index (and further metrics) based on Google Scholar. 2011 [cited 2014 3 May]. Available from: http://labs.dbs.uni-leipzig.de/gsh/

9. Couto F. Citation Impact Discerning Self-citations. 2013 [cited 2014 3 May]. Available from: http://cids.fc.ul.pt/cids_2_3/index.php.

10. Epstein D. Impact factor manipulation. The Write Stuff. 2007;16(3):133–4.

11. LSE Public Policy Group. Maximizing the impacts of your research: a handbook for social scientists. London, UK: London School of Economics and Political Science; 2011. http://www.lse.ac.uk/government/research/resgroups/lsepublicpolicy/docs/lse_impact_handbook_april_2011.pdf.
